Every team that starts experimenting with generative AI (gen AI) eventually runs into the same wall: scaling it. Running 1 or 2 models is simple enough. Managing dozens, supporting hundreds of users, and keeping GPU costs under control is something else entirely.

Teams often find themselves juggling hardware requests, running multiple versions of the same model, and trying to deliver performance that actually keeps up in production. These are the same kinds of infrastructure and operational challenges we’ve seen in other workloads, but now applied to AI systems that require far more resources and coordination.

In this post, we look at 3 practical strategies that help organizations solve these scaling problems:

- GPU-as-a-Service to better utilize expensive GPU hardware

- Models-as-a-Service to give teams controlled and reliable access to shared models

- Scalable inference with vLLM and llm-d to achieve production-grade runtime performance efficiently

The common challenges of scaling AI

When moving from proof of concept to production, cost quickly becomes the first hurdle. Running large models in production is expensive, especially when training, tuning, and inference workloads are all competing for GPU capacity.

Control is another challenge. IT teams must balance the freedom to experiment with safeguards that strengthen security and compliance. Without proper management, internal developers may turn to unapproved services or external APIs, resulting in shadow AI.

Finally, there is scale. Deploying a few models can be simple, but maintaining dozens of high-parameter models across environments and teams requires automation and stronger observability.

The following 3 strategies directly address these challenges.

1. Optimizing GPU resources with GPU-as-a-service

GPUs are essential for gen AI workloads, but they are both expensive and scarce. In many organizations, GPU clusters are distributed across cloud and on-premises environments, and are often underutilized or misallocated. Provisioning can take days, and it’s difficult to track usage across teams.

These challenges have led many enterprises to adopt GPU-as-a-service, an approach that automates allocation, enforces quotas, and provides visibility into how GPUs are being used across the organization.
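As a rough illustration of the quota idea, the sketch below models a shared GPU pool where each team has a cap and every request is checked against both the team quota and the remaining capacity. The class, method, and team names are hypothetical and are not part of OpenShift AI; a real platform would enforce this through its scheduler and quota objects rather than application code.

```python
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Toy shared GPU pool with per-team quotas (all names are hypothetical)."""
    total_gpus: int
    quotas: dict[str, int]                       # team -> max GPUs it may hold
    in_use: dict[str, int] = field(default_factory=dict)

    def free_gpus(self) -> int:
        """GPUs in the pool that no team currently holds."""
        return self.total_gpus - sum(self.in_use.values())

    def request(self, team: str, count: int) -> bool:
        """Grant the request only if it fits both the team quota and the pool."""
        if count <= 0:
            return False
        held = self.in_use.get(team, 0)
        if held + count > self.quotas.get(team, 0):
            return False  # would exceed the team's quota
        if count > self.free_gpus():
            return False  # not enough free GPUs in the pool
        self.in_use[team] = held + count
        return True

    def release(self, team: str, count: int) -> None:
        """Return GPUs to the pool, never dropping below zero."""
        held = self.in_use.get(team, 0)
        self.in_use[team] = max(0, held - count)

    def usage_report(self) -> dict[str, str]:
        """Per-team 'held/quota' summary for administrators."""
        return {
            team: f"{self.in_use.get(team, 0)}/{quota}"
            for team, quota in self.quotas.items()
        }
```

For example, with `GpuPool(total_gpus=8, quotas={"pricing": 4, "maintenance": 6})`, a fifth GPU for `pricing` is refused by the quota check, while `usage_report()` gives administrators a single view of who holds what.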

Case Study: Turkish Airlines

Turkish Airlines is a strong example of managing GPU access more efficiently. With about 20 data scientists and more than 200 application developers, the company manages more than 50 AI use cases ranging from dynamic pricing to predictive maintenance. Its goal is to generate about $100 million USD in new revenue or cost savings annually.

Already using Red Hat OpenShift, Turkish Airlines implemented Red Hat OpenShift AI to automate GPU provisioning. Instead of filing service tickets and waiting hours or days for access, developers can now launch GPU-enabled environments in minutes. This self-service model eliminates delays while giving administrators full visibility into resource usage and costs.

Demo: Implementation of GPU-as-a-Service

This 10-minute demo shows how GPU-as-a-service can be deployed using OpenShift AI to automate GPU allocation, enforce quotas, and track usage across users, all from a single administrative interface.

2. Improving control with Models-as-a-Service

Automating GPU allocation addresses costs, but many organizations then face the challenge of management. As teams deploy more models, duplication and fragmentation grow. Developers often run their own copies of common open gen AI models, which consume additional GPUs and create version drift.

A Models-as-a-Service (MaaS) pattern centralizes these efforts. Models like Llama, Mistral, Qwen, Granite, and DeepSeek can be hosted once and served through APIs, giving developers instant access, while platform teams can manage permissions, quotas, and usage from a single control point.

From infrastructure-as-a-service to models-as-a-service

This shift replaces hardware provisioning with standardized model access. Models are centrally deployed and exposed through APIs that developers call directly. Platform engineers monitor utilization, enforce quotas, and can provide internal chargeback or showback to align resource usage with business priorities.

API-driven model access

Most large language model (LLM) enterprise workloads are already API-based. Red Hat AI Inference Server, available throughout the full range of Red Hat AI offerings, exposes an OpenAI-compatible API endpoint, enabling teams to easily integrate with frameworks like LangChain or custom enterprise applications.
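To make "OpenAI-compatible" concrete, here is a minimal sketch of a chat completion call using only the Python standard library. The base URL, model name, and API key are placeholders (assumptions, not values from this post); the `/v1/chat/completions` path and the `choices[0].message.content` response field follow the OpenAI API convention that such endpoints mirror.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,  # placeholder: whatever name the platform team published
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Usage would look like `ask(build_chat_request("https://models.example.internal", "granite", "Summarize this ticket", "my-key"))`, where every argument is hypothetical. Because the request shape is the same for every hosted model, switching models is a change to one string rather than a new integration.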

If an organization already has an API gateway, it can connect directly to Red Hat AI for authentication and token-based metering. For those that don’t, Red Hat AI 3 introduces a built-in, token-aware gateway that runs alongside the model within the cluster. Over the Red Hat AI 3 lifecycle, we will deliver capabilities to automatically monitor usage, set limits, and gather analytics without deploying external components.
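The idea of token-based metering can be sketched in a few lines. The example below is not the Red Hat AI gateway; it is a hypothetical in-memory meter that reads the `usage.total_tokens` field found in OpenAI-style responses and tracks it against a per-user budget.

```python
from collections import defaultdict


class TokenMeter:
    """Hypothetical per-user token budget, tracked in memory for illustration."""

    def __init__(self, limits: dict[str, int]):
        self.limits = limits            # user -> token budget for the period
        self.used = defaultdict(int)    # user -> tokens consumed so far

    def allow(self, user: str) -> bool:
        """Admit a request only while the user has budget left."""
        return self.used[user] < self.limits.get(user, 0)

    def record(self, user: str, response: dict) -> int:
        """Add the tokens reported by the model server; return remaining budget."""
        tokens = response.get("usage", {}).get("total_tokens", 0)
        self.used[user] += tokens
        return self.limits.get(user, 0) - self.used[user]
```

Metering on tokens rather than request counts matters because two requests to the same model can differ by orders of magnitude in GPU time, depending on prompt and output length.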

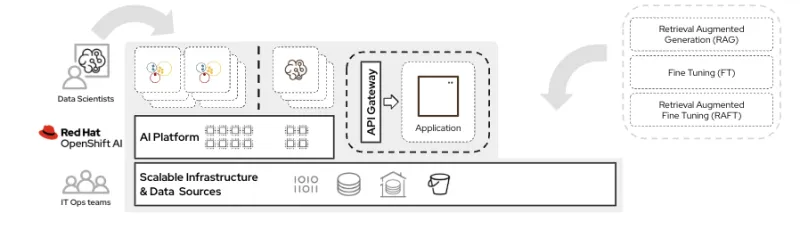

Models-as-a-Service lays the foundation for more advanced use cases such as retrieval-augmented generation (RAG), retrieval-augmented fine-tuning (RAFT), synthetic data generation, and agentic AI systems built on top of shared model endpoints (See Figure 1).

3. Scaling inference with vLLM and llm-d

Even with efficient GPU allocation and model management, inference performance often becomes the bottleneck. LLMs are resource intensive, and maintaining fast response times at scale requires optimized runtimes and sometimes even distributed execution. This challenge is further complicated by the need to serve different use cases on a single model, such as large or small contexts and different service level objectives (SLOs) around metrics such as time-to-first-token (TTFT) and inter-token latency (ITL).
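For readers new to these two metrics, the sketch below shows one common way to compute them from the arrival times of streamed tokens. The function names and the choice to report ITL as a mean are assumptions for illustration; benchmarking tools may report percentiles instead.

```python
def time_to_first_token(request_start: float, token_times: list[float]) -> float:
    """Seconds between sending the request and receiving the first token."""
    if not token_times:
        raise ValueError("no tokens were received")
    return token_times[0] - request_start


def mean_inter_token_latency(token_times: list[float]) -> float:
    """Average gap in seconds between consecutive tokens after the first."""
    if len(token_times) < 2:
        raise ValueError("need at least two tokens to measure a gap")
    gaps = [later - earlier for earlier, later in zip(token_times, token_times[1:])]
    return sum(gaps) / len(gaps)
```

TTFT is dominated by prompt processing, so it grows with context length, while ITL reflects steady-state generation speed. That is why a long-context summarization workload and a short-prompt chat workload can need different SLOs on the same model.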

The case for vLLM

vLLM has become the preferred open source inference runtime for large models. It offers high throughput and supports multiple accelerator types, enabling enterprises to standardize serving across hardware vendors. When combined with model compression and quantization, it can reduce GPU requirements by 2 to 3 times while maintaining 99% accuracy for models such as Llama, Mistral, Qwen, and DeepSeek.

A large North American financial institution applied this approach to its on-premises deployment of Llama and Whisper models in a disconnected environment. Hosting the workloads on OpenShift AI simplified management, improved data security, and delivered the required throughput. To further increase scalability, the team began exploring distributed inference with llm-d.

Introducing llm-d for distributed inference

The llm-d project extends proven cloud-native scaling techniques to LLMs. Traditional microservices scale easily because they are stateless, but LLM inference with vLLM is not. Each vLLM instance builds and maintains a key-value (KV) cache during the prefill phase, which is the initial phase where the model processes the entire input request to generate the first token. Recreating that cache on a new pod wastes computation and increases latency.
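A back-of-the-envelope estimate shows why that cache is worth keeping. For a standard transformer, each token stores one key and one value vector per layer and per KV head, so the cache grows linearly with context length. The sketch below applies that formula; the model dimensions in the usage note are illustrative values, not figures from this post.

```python
def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int,
                             head_dim: int, bytes_per_value: int) -> int:
    """Bytes of KV cache one token occupies: a key and a value per layer and head."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   bytes_per_value: int, num_tokens: int) -> int:
    """Total KV cache size for a sequence of num_tokens tokens."""
    per_token = kv_cache_bytes_per_token(
        num_layers, num_kv_heads, head_dim, bytes_per_value
    )
    return per_token * num_tokens
```

With an assumed configuration of 32 layers, 8 KV heads, a head dimension of 128, and 16-bit values, one token takes 128 KiB and an 8,192-token context takes 1 GiB. Rebuilding that on another pod means repeating the full prefill computation for the same input.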

llm-d introduces intelligent scheduling and routing to avoid that duplication. It separates workloads between the prefill and decode phases so that each runs on hardware optimized for its needs. It also implements prefix-aware routing, which detects when a previous context has already been processed and sends the next request to the pod most likely to hold that cache. The result is faster response times and better GPU efficiency.
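The routing idea can be sketched as follows. This is a simplified illustration, not llm-d's actual scheduler: the class name, block size, and tie-breaking rule are all assumptions. Prompts are split into fixed-size token blocks, each block is hashed together with everything before it, and a request goes to the pod that already holds the longest run of matching blocks.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per cache block; an illustrative value


def block_hashes(tokens: list[int]) -> list[str]:
    """Hash each full block chained with all earlier blocks.

    Two prompts share a hash at position i only if their first i+1 blocks match.
    """
    hashes = []
    running = hashlib.sha256()
    full_length = len(tokens) - len(tokens) % BLOCK_SIZE
    for start in range(0, full_length, BLOCK_SIZE):
        block = tokens[start:start + BLOCK_SIZE]
        running.update(",".join(map(str, block)).encode() + b";")
        hashes.append(running.hexdigest())
    return hashes


class PrefixRouter:
    """Hypothetical router: longest cached prefix wins, lowest load breaks ties."""

    def __init__(self, pods: list[str]):
        self.cached: dict[str, set[str]] = {pod: set() for pod in pods}
        # Counts requests routed so far; a real scheduler would track in-flight work.
        self.load: dict[str, int] = {pod: 0 for pod in pods}

    def _matched_blocks(self, pod: str, hashes: list[str]) -> int:
        """Number of leading blocks of this prompt the pod already holds."""
        count = 0
        for block_hash in hashes:
            if block_hash not in self.cached[pod]:
                break
            count += 1
        return count

    def route(self, tokens: list[int]) -> str:
        """Pick a pod for the prompt and record that it now caches the prompt."""
        hashes = block_hashes(tokens)
        best = max(
            self.cached,
            key=lambda pod: (self._matched_blocks(pod, hashes), -self.load[pod]),
        )
        self.cached[best].update(hashes)
        self.load[best] += 1
        return best
```

In this sketch, two requests that share a long system prompt land on the same pod, so the second one can skip most of its prefill. Requests with no cached prefix fall back to the least-loaded pod, which keeps cache affinity from turning into a hotspot.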

Demo: Scaling with llm-d

This 5-minute demo highlights how llm-d uses intelligent routing and cache awareness to improve inference performance. It shows requests automatically routed to model instances that already hold the relevant cache, significantly reducing time to first token and improving throughput across GPUs.

Bringing it all together

Building scalable AI systems requires more than just adding GPUs. It requires a thoughtful design that balances performance, control, and simplicity. The patterns described here help teams do just that.

- GPU-as-a-Service helps you make the most of your hardware by turning it into a shared, policy-controlled resource

- Models-as-a-Service brings order to how models are deployed and accessed as APIs across projects

- vLLM and llm-d make it possible to run those models at production scale without compromising performance or breaking budgets

Together, they help create a practical roadmap for anyone modernizing their AI platform with Red Hat AI. They make it easier to move quickly, stay efficient and scale with confidence.
