In the last article, we discussed how integrating AI into business-critical systems exposes enterprises to a new set of AI security and AI safety risks. Rather than reinventing the wheel or relying on fragmented, improvised approaches, organizations must build on established standards and best practices to stay ahead of cybercriminals and other adversaries.

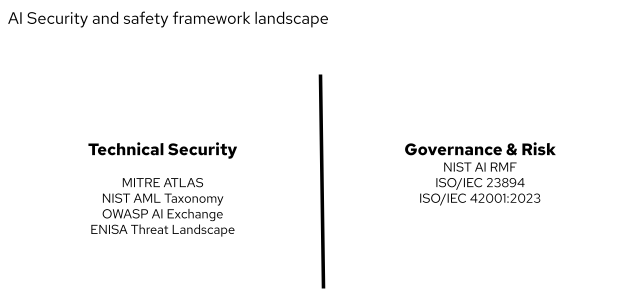

To manage these challenges, enterprises must take a formal approach using a set of frameworks that map AI threats, define controls and guide responsible adoption. In this article, we examine the evolving AI security and safety threat landscape through leading efforts such as MITRE ATLAS, NIST, OWASP, and others.

Note: Before diving into the frameworks, it is important to understand the differences between AI security and AI safety. See our previous article, which provides key characteristics and examples of each.

MITRE ATLAS: Mapping AI Threats

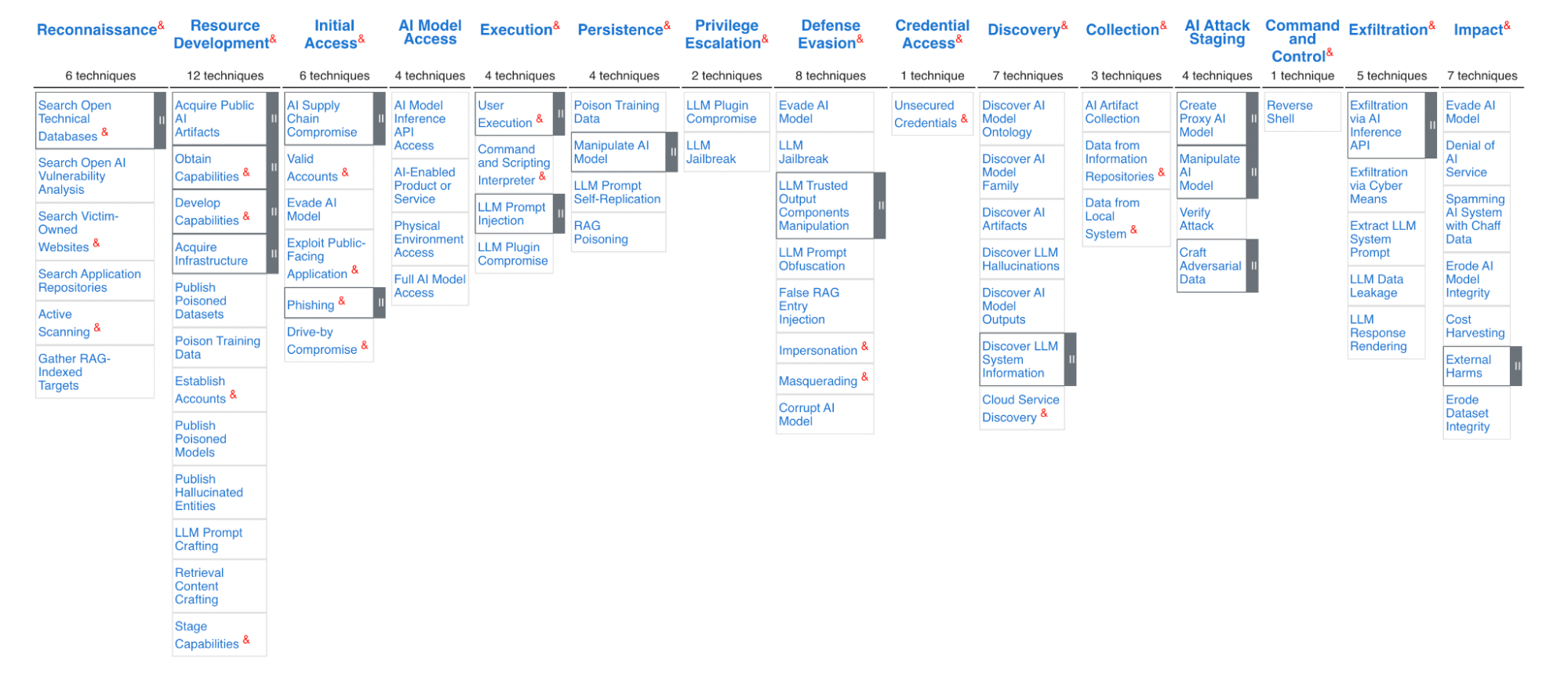

The Adversarial Threat Landscape for Artificial-Intelligence Systems (ATLAS) from MITRE is one of the most comprehensive resources for AI-specific attack techniques. Similar to the familiar MITRE ATT&CK framework for cybersecurity, ATLAS catalogs the tactics, techniques, and procedures that adversaries use to exploit machine learning (ML) systems, including:

- Data poisoning: corrupting training data to manipulate outcomes

- Model evasion: crafting inputs to trick models into misclassification

- Model theft: replicating a proprietary model through repeated queries

Enterprises can use MITRE ATLAS to anticipate adversary tactics and integrate AI threat modeling into existing red teaming and penetration testing practices.

NIST AI Risk Management Framework (AI RMF)

The NIST AI RMF provides a structured methodology for managing AI risks across the lifecycle. Its core functions (Govern, Map, Measure, and Manage) help organizations identify risks, measure their likelihood and impact, and put controls in place.

Key considerations include:

- Governance practices for trustworthy AI

- Alignment with ethical principles

- Risk-based prioritization for AI deployments

This framework is particularly useful for enterprises building a holistic AI governance program.
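The identify, measure, and control loop described in this section can be sketched as a small risk register. This is a minimal illustration, not part of the NIST AI RMF itself: the `AIRisk` class, the 1 to 5 scales, the likelihood-times-impact score, and the sample values are all hypothetical assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIRisk:
    """One entry in a hypothetical AI risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    control: str     # mitigation the organization plans to apply

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring; an assumption for this sketch,
        # not a formula prescribed by the framework.
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Illustrative entries only; the numbers are made up for the example.
risks = [
    AIRisk("Data poisoning", 2, 5, "Validate and version training data"),
    AIRisk("Model evasion", 4, 3, "Test models against adversarial inputs"),
    AIRisk("Model theft", 3, 3, "Rate-limit and monitor model queries"),
]

for r in prioritize(risks):
    print(f"{r.score:>2}  {r.name}: {r.control}")
```

Sorting by score is one possible way to express risk-based prioritization; a real program would likely use the organization's own scales and review process.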

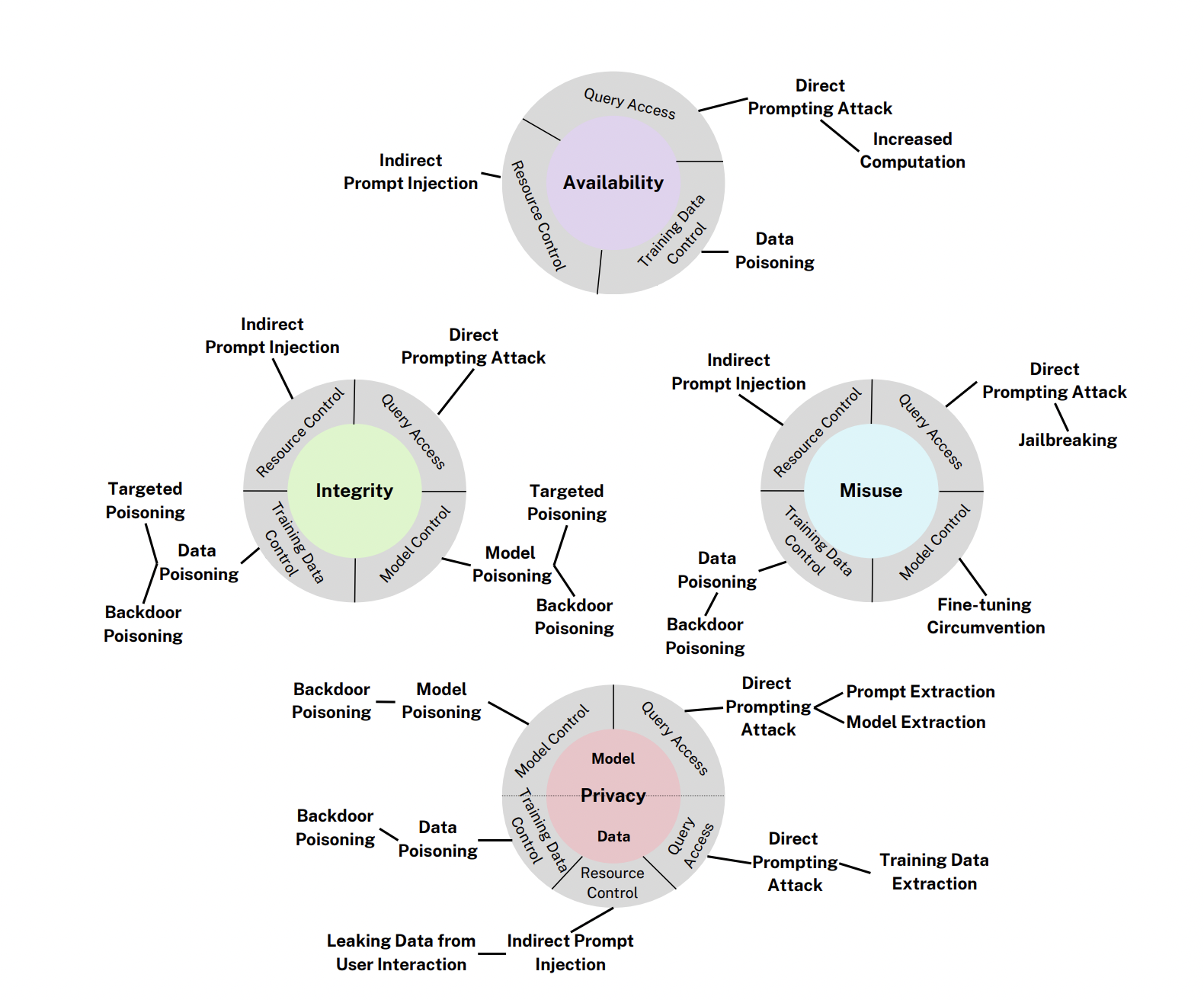

NIST Adversarial Machine Learning (AML) taxonomy

To complement the AI RMF, NIST also offers an AML taxonomy, which categorizes the different attack surfaces in AI and ML systems. It identifies:

- Evasion attacks during inference

- Poisoning attacks during training

- Extraction and inversion attacks targeting model confidentiality

This taxonomy helps enterprises translate AI security and AI safety risks into familiar categories for cybersecurity teams.
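As a rough sketch of that translation, the attack classes listed above can be stored in a lookup table that tags each finding with a lifecycle stage and a familiar security property. The `AttackClass` type, the `triage` helper, the property labels, and the choice to place extraction and inversion at inference time are assumptions made for illustration; they are not taken from the NIST document.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttackClass:
    lifecycle_stage: str   # where the attack takes place
    property_at_risk: str  # security property a cybersecurity team already tracks


# Hypothetical mapping for illustration; labels are assumptions.
AML_CLASSES = {
    "evasion": AttackClass("inference", "integrity"),
    "poisoning": AttackClass("training", "integrity"),
    "extraction": AttackClass("inference", "confidentiality"),
    "inversion": AttackClass("inference", "confidentiality"),
}


def triage(finding: str) -> str:
    """Describe a finding in terms a cybersecurity team can route."""
    cls = AML_CLASSES.get(finding.strip().lower())
    if cls is None:
        return f"{finding}: unclassified, needs manual review"
    return f"{finding}: {cls.property_at_risk} risk during {cls.lifecycle_stage}"


print(triage("Poisoning"))
print(triage("Extraction"))
```

Unknown attack names fall through to manual review rather than being silently assigned a category.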

OWASP AI Exchange

The Open Web Application Security Project (OWASP), known for its leadership in web security, has launched several initiatives in the AI security and safety space. Two of these are the AI Security and Privacy Guide and the OWASP AI Exchange. These resources focus on security-enhanced AI application development, addressing the following:

- Insecure model configuration

- Supply chain risks in AI pipelines

- AI-specific vulnerabilities in APIs and model endpoints

Two documents deserve special mention. Both are variants of the popular OWASP Top 10 for web vulnerabilities, applied here to AI security: the OWASP Machine Learning Security Top Ten and the OWASP Top 10 for Large Language Model Applications. For developers, OWASP provides actionable checklists to incorporate security into the AI software development lifecycle.

ISO/IEC standards for AI

At the international level, ISO/IEC JTC 1/SC 42 develops AI standards covering governance, lifecycle management and risk. ISO/IEC 42001:2023 is the first international standard designed specifically for AI management systems (AIMS), much as ISO 9001 is for quality management systems (QMS) and ISO/IEC 27001 is for information security management systems (ISMS). It provides a structured framework for organizations to responsibly develop, deploy and manage AI systems, with a strong emphasis on ethical considerations, risk management, transparency and accountability.

While ISO/IEC 42001:2023 covers the entire AI management system, ISO/IEC 23894:2023 is laser-focused on a comprehensive framework for AI risk management. It complements common risk management frameworks by addressing the unique risks and challenges posed by AI, such as algorithmic bias, lack of transparency and unintended outcomes. This standard supports the responsible use of AI by promoting a systematic, proactive approach to risk, improving trust, safety and compliance with ethical and regulatory expectations.

These standards provide a globally recognized baseline that businesses can adhere to, especially those operating in multiple jurisdictions.

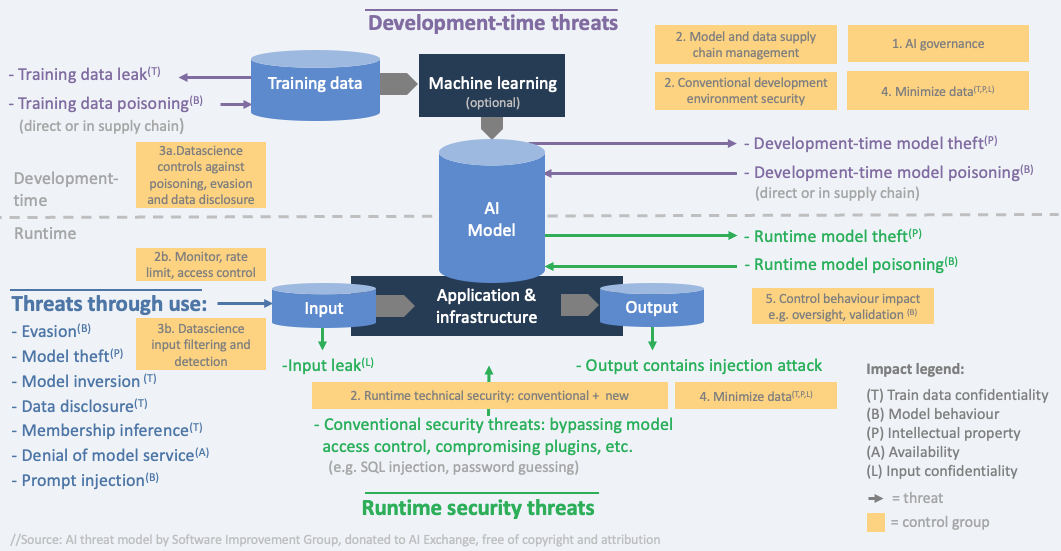

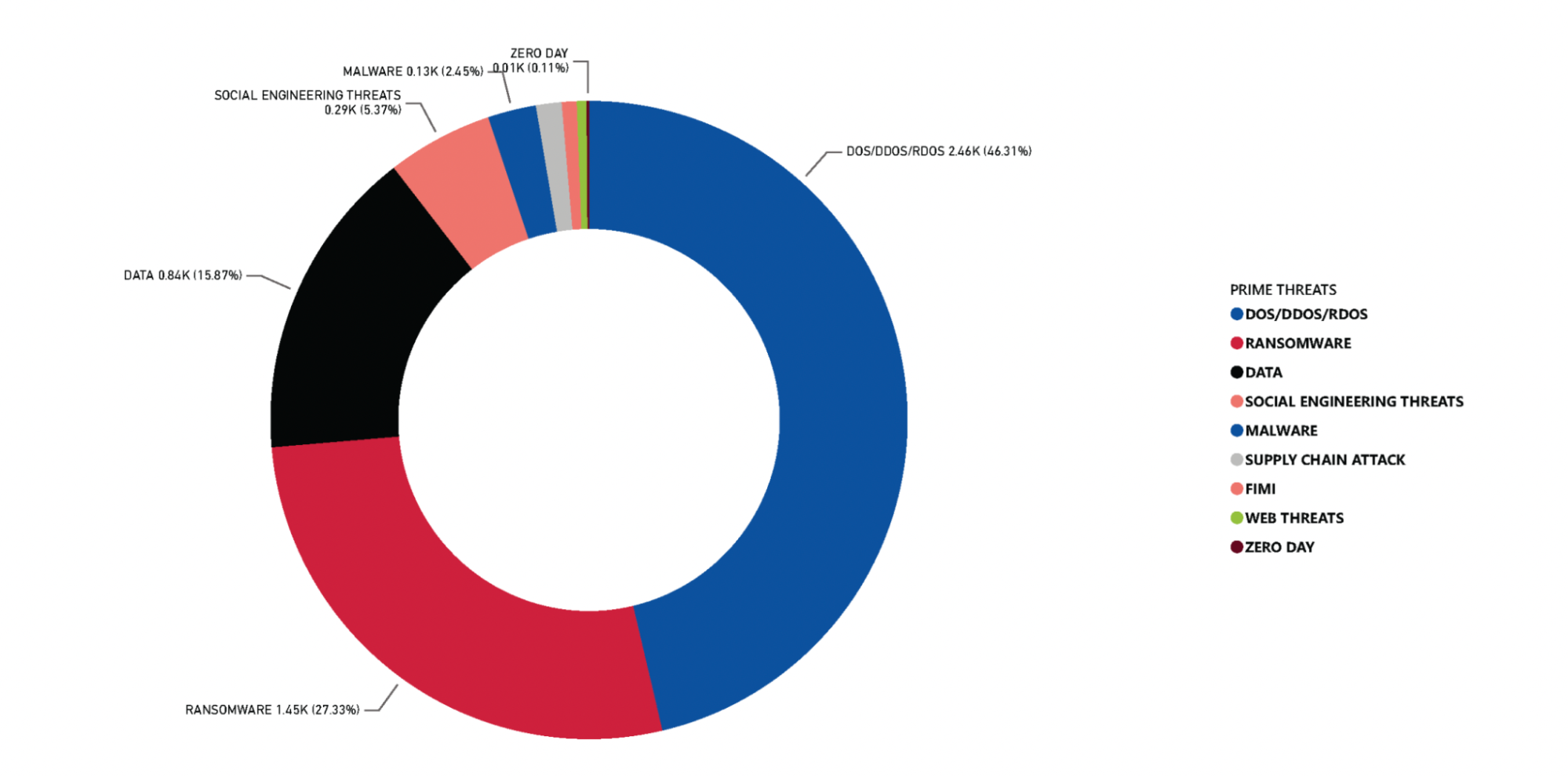

ENISA AI threat landscape

The European Union Agency for Cybersecurity (ENISA) has outlined AI-specific threats in its AI threat landscape mapping. This includes not only adversarial attacks, but also systemic risks such as software supply chain vulnerabilities and ethical misuse.

ENISA’s mapping helps companies connect technical vulnerabilities to broader organizational risks.

Responsible AI standardization

Responsible AI considerations are essential so that AI systems, especially powerful generative models, are developed and deployed in ways that are ethical, transparent, secure and aligned with human values.

In addition to “classic” technical security issues, the rapid development of AI technology brings additional risks, such as misinformation, bias, misuse and lack of accountability. To address these specific challenges, a community of industry experts under the Linux Foundation AI & Data Foundation created the Responsible Generative AI Framework (RGAF), which provides a practical, structured approach to managing responsibility in the development and use of generative AI (gen AI) systems. RGAF identifies 9 key dimensions of responsible AI, such as transparency, accountability, robustness and fairness. Each dimension outlines relevant risks and recommends actionable mitigation strategies.

RGAF complements existing high-level standards (such as ISO/IEC 42001:2023 and ISO/IEC 23894:2023, among others) by specifically focusing on gen AI issues, and it aligns with global policies and regulations to support interoperability and responsible innovation, based on open source principles and tools.

Conclusion

No single framework addresses the full scope of AI security and safety. Instead, businesses must draw from multiple sources.

By blending these perspectives, organizations can create a holistic, defense-in-depth strategy that leverages existing cybersecurity investments while addressing the new risks posed by AI.

Navigate your AI journey with Red Hat. Contact Red Hat AI Consulting Services for AI security and safety discussions for your business.
